Poker Neural Network Example

How PokerSnowie was born

Online poker has grown tremendously over the past decade, opening up the thrill of the game to more people. We feel that everybody should get the chance to become a world-class poker player. We believe that giving players access to software that teaches how to play in an optimal way will develop interest for the game long-term and in a responsible way.

Rather than following the common trend of developing yet another 'strategy' to expose and exploit weaknesses of other players, we wanted to come up with a range of educational tools based on a balanced and non-exploitative approach. The aim was to provide every kind of player with the tools they need to significantly improve their level of play, balance their play to become immune to exploitation by other players, increase their profits and rekindle their passion for poker.

Good examples are [5], where a poker classification system was built, and TD-Gammon, a neural network that taught itself to play backgammon solely by playing against itself. In this work, artificial neural networks are used to classify hands of five cards drawn from a standard deck of 52 according to poker rules. Data for training and testing the designed networks can be found on the UCI dataset page [2]; a similar data set is used in [3] and also in [4] for a tutorial. The networks are designed with the aid of MATLAB’s Neural Networks Toolbox.

We had already applied this approach to backgammon, a similarly complex and skill-orientated game with some elements of luck, in the form of BackgammonSnowie, with fantastic results: a significant increase in overall proficiency within the backgammon community and a chance for everybody to become a world-class player.

Poker's growing popularity and concurrent sense of unrest drew us towards our next logical step: the development of an AI-based world-class No Limit Hold'em poker player, focusing on balanced and non-exploitative play, with a view to training and coaching any player in long-term winning poker strategy, and working to raise overall proficiency and enjoyment in the greater poker community.

Thus PokerSnowie was born.

PokerSnowie's Poker Knowledge

PokerSnowie is artificial intelligence-based software for No Limit Hold'em Poker. It has learned to play No Limit, from heads-up games to full ring games (10 players), and knows how to play from short stacks all the way up to very deep stacks (400 big blinds).

PokerSnowie's base

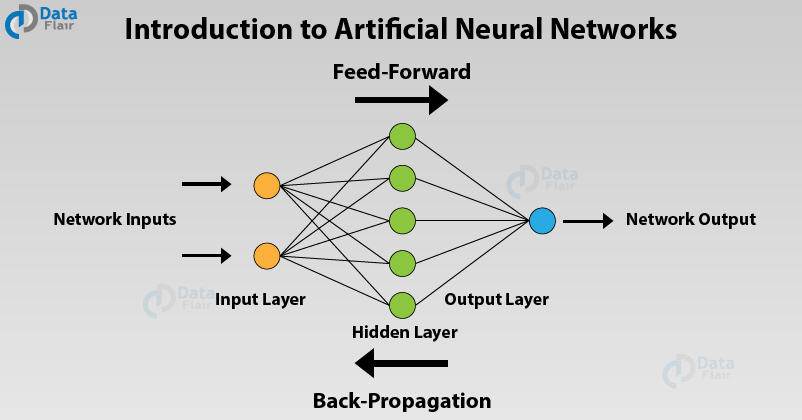

PokerSnowie is based on artificial neural networks. Those neural networks are a mathematical model based on biological neural networks, like those found in the human brain. While biology is far from explaining exactly how the neurons in the brain work and how learning takes place, some principles can be expressed as mathematical formulas, resulting in artificial neural networks.

The idea for artificial neural networks first came about in 1943 (Warren McCulloch and Walter Pitts) and a lot of applications nowadays use them very successfully. Using neural networks has become standard in many areas of the industry. However, the challenge was to design a learning algorithm for the game of Poker, which is a very complex multi-player game with hidden information. While for the specific variant of fixed limit heads-up the computer is known to be just as good as the best professional Poker players, nobody could create strong artificial intelligence-based software for the most popular (and most complex) variant: No Limit full ring game. This goal has now been achieved with the release of PokerSnowie.

PokerSnowie's initial training

To begin with, PokerSnowie played completely at random. After each hand, successful betting lines were reinforced and unsuccessful ones were played less often. For example, calling on the river with a weak high-card hand mostly loses, so PokerSnowie would call less and less with such hands, whereas trips win most of the time, so calling in this situation was reinforced.

Many people are surprised that computers can learn something 'psychological' like a bluff. In fact, this is one of the first things PokerSnowie learned. If a bluff is often successful in certain situations, bluffing is reinforced and PokerSnowie bluffs more often.
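The text does not say how PokerSnowie's reinforcement is implemented, so the following is only a toy sketch of the general idea: raise the probability of an action after it wins and lower it after it loses. The function names, the learning rate and the payoff sequences are all hypothetical and chosen purely for illustration.

```python
def reinforce(call_prob, payoff, learning_rate=0.05):
    """Nudge the probability of calling up after a win, down after a loss."""
    # Only the sign of the payoff is used in this simplified sketch.
    direction = 1.0 if payoff > 0 else -1.0
    updated = call_prob + learning_rate * direction
    # Clamp so the result stays a valid probability.
    return min(1.0, max(0.0, updated))


def reinforce_over_hands(call_prob, payoffs):
    """Apply one reinforcement update per hand played."""
    for payoff in payoffs:
        call_prob = reinforce(call_prob, payoff)
    return call_prob


# Hypothetical river results: a weak high-card hand loses most calls,
# trips win most calls.
weak_hand_payoffs = [-1, -1, 2, -1, -1, -1, -1, 2, -1, -1]
trips_payoffs = [2, 2, -1, 2, 2, 2, 2, -1, 2, 2]

weak_call_prob = reinforce_over_hands(0.5, weak_hand_payoffs)
trips_call_prob = reinforce_over_hands(0.5, trips_payoffs)
print(weak_call_prob, trips_call_prob)
```

Starting from a coin flip, the calling probability drifts down for the weak hand and up for trips, which is the behaviour the paragraph above describes.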

No expert knowledge

There is no expert knowledge built into PokerSnowie's strategy. This proved to be a disadvantage at the start of training: strong hands like a full house were randomly played and even folded. Playing quads or straight flushes was especially difficult to learn because it's very rare to hold those hands. Here, a big difference can be seen between human learning and PokerSnowie's learning: a human would know that quads is a very strong hand that wins almost every time. This leads to the obvious conclusion that quads should never be folded. PokerSnowie, however, sees a hand that it doesn't know and with which it has very little experience. Only slowly did it adapt its strategy with these hands in the right direction. Of course it's easy for the neural network to learn how to play these kinds of hands, but it takes time.

On the other hand, giving PokerSnowie the complete freedom to learn whatever it thinks is best has extraordinary advantages. If an expert were to stipulate parts of the strategy, those parts could not be improved by PokerSnowie, even if the expert's strategy proved to be wrong. The beauty of the expert-free approach is that PokerSnowie can become a much better poker player than the programmers and also better than humans in general!

PokerSnowie on the way to the ultimate balanced game

After the initial phase of training, PokerSnowie had learned the basics: folding bad hands, calling with good hands, raising as a bluff and for value. PokerSnowie's strategy already ranked alongside good poker players. However, the strategy was still quite unbalanced. In some situations, for example, it would bluff far too much. An attentive opponent could exploit this by calling with weaker hands or raising back, and score a nice profit against PokerSnowie in the long run.

The next and most extensive phase of the training was about getting the balance right. PokerSnowie constantly played against adapting agents that tried to exploit PokerSnowie's strategy as much as possible. If, for example, PokerSnowie bluffed too little, the agents would start calling less and therefore pay off PokerSnowie's good hands less. If PokerSnowie bluffed too much, the agents would start calling more and re-raise more aggressively. PokerSnowie tried to defend against those agents by constantly changing its hand ranges. This practice of adapting and learning is an on-going process which continually improves PokerSnowie's balance and robustness, making it difficult for agents to find exploitable leaks in PokerSnowie's play.
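To see why an unbalanced bluffing frequency is exploitable, consider a simplified river spot. This sketch assumes a toy model that is not taken from PokerSnowie: the bettor's range contains only bluffs and value hands, the caller holds a hand that beats exactly the bluffs, and `pot` and `bet` are hypothetical chip amounts.

```python
def call_ev(bluff_fraction, pot, bet):
    """Expected value of calling a river bet, relative to folding (EV 0)."""
    # Against a bluff the caller wins the pot plus the bet;
    # against a value hand the caller loses the amount called.
    return bluff_fraction * (pot + bet) - (1.0 - bluff_fraction) * bet


def best_response(bluff_fraction, pot, bet):
    """What an exploiting agent does against a known bluff fraction."""
    return "call" if call_ev(bluff_fraction, pot, bet) > 0 else "fold"


def balanced_bluff_fraction(pot, bet):
    """Bluff fraction that makes the caller indifferent between call and fold."""
    return bet / (pot + 2.0 * bet)


pot, bet = 1.0, 1.0
for fraction in (0.1, balanced_bluff_fraction(pot, bet), 0.6):
    print(round(fraction, 3), best_response(fraction, pot, bet),
          round(call_ev(fraction, pot, bet), 3))
```

With a pot-sized bet the break-even bluff fraction is one third. Below it the exploiting agent folds and stops paying off value hands; above it the agent calls profitably. A balanced strategy sits at the point where neither adjustment gains anything.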

PokerSnowie will periodically release updates to its core AI brain. These version updates incorporate new learning and experience gained from training on large computer clusters and using refined algorithms. The ultimate result of all this ongoing work is that over time PokerSnowie's advice becomes stronger and more consistent across many different situations.

Detailed information about all major AI releases is available on the PokerSnowie Blog.

A Recurrent Neural Network (RNN) is a type of neural network in which the output from the previous step is fed as input to the current step. In traditional neural networks, all inputs and outputs are independent of each other. In tasks such as predicting the next word of a sentence, however, the previous words are needed, so the network has to remember them. RNNs address this with the help of a hidden layer. Their most important feature is the hidden state, which remembers some information about a sequence.

An RNN has a “memory” that retains information about what has been calculated so far. It uses the same parameters for every input, because it performs the same task on all the inputs or hidden layers to produce the output. This keeps the number of parameters down, unlike other neural networks.

How RNN works

How an RNN works can be understood with the help of the example below:

Example:

Suppose there is a deeper network with one input layer, three hidden layers and one output layer. As in other neural networks, each hidden layer has its own set of weights and biases: say (w1, b1) for the first hidden layer, (w2, b2) for the second and (w3, b3) for the third. This means that these layers are independent of each other, i.e. they do not memorize the previous outputs.

Now the RNN will do the following:

- The RNN converts the independent activations into dependent activations by giving all the layers the same weights and biases. This limits the growth in the number of parameters, and the network memorizes each previous output by feeding it as input to the next hidden layer.

- Hence these three layers can be joined into a single recurrent layer in which the weights and biases of all the hidden layers are the same.

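- Formula for calculating the current state: $h_t = f(h_{t-1}, x_t)$, where $h_t$ is the current state, $h_{t-1}$ is the previous state and $x_t$ is the current input.

- Formula for applying the activation function (tanh): $h_t = \tanh(W_{hh} h_{t-1} + W_{xh} x_t)$, where $W_{hh}$ is the weight at the recurrent neuron and $W_{xh}$ is the weight at the input neuron.

- Formula for calculating the output: $y_t = W_{hy} h_t$, where $y_t$ is the output and $W_{hy}$ is the weight at the output layer.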
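As a minimal sketch of a single recurrent layer, the code below applies the standard recurrence h_t = tanh(W_hh h_{t-1} + W_xh x_t) at every time step and computes the output y = W_hy h_t from the final state. It uses plain Python lists instead of a numerical library, and the layer sizes, weight values and inputs are hypothetical, chosen only to make the example runnable.

```python
import math


def rnn_step(h_prev, x_t, W_hh, W_xh):
    """One time step: h_t = tanh(W_hh * h_prev + W_xh * x_t)."""
    h_t = []
    for i in range(len(h_prev)):
        total = sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        total += sum(W_xh[i][k] * x_t[k] for k in range(len(x_t)))
        h_t.append(math.tanh(total))
    return h_t


def rnn_output(h_t, W_hy):
    """Output layer: y_t = W_hy * h_t."""
    return [sum(row[j] * h_t[j] for j in range(len(h_t))) for row in W_hy]


def rnn_forward(inputs, h0, W_hh, W_xh, W_hy):
    """Run the same weights over every time step, carrying the state along."""
    h = h0
    for x_t in inputs:
        h = rnn_step(h, x_t, W_hh, W_xh)
    return rnn_output(h, W_hy), h


# Hypothetical sizes: 1 input feature, 2 hidden units, 1 output.
W_hh = [[0.5, 0.0], [0.0, 0.5]]
W_xh = [[1.0], [0.5]]
W_hy = [[1.0, -1.0]]
inputs = [[1.0], [0.5]]  # two time steps

output, final_state = rnn_forward(inputs, [0.0, 0.0], W_hh, W_xh, W_hy)
print(output, final_state)
```

Note that `rnn_forward` reuses `W_hh` and `W_xh` at every step, which is the weight sharing that lets the separate hidden layers of the example collapse into one recurrent layer.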

Training an RNN

- A single time step of the input is provided to the network.

- The current state is then calculated from the current input and the previous state.

- The current h_t becomes h_{t-1} for the next time step.

- This can be repeated for as many time steps as the problem requires, combining the information from all the previous states.

- Once all the time steps are completed, the final current state is used to calculate the output.

- The output is then compared to the actual output, i.e. the target output, and the error is generated.

- The error is then back-propagated through the network to update the weights, and hence the network (RNN) is trained.
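The training steps above can be sketched for the smallest possible case: an RNN with a single hidden unit and scalar weights. This is an illustrative sketch under stated assumptions, not a production implementation. The squared-error loss, the learning rate, the starting weights and the input sequence are all hypothetical choices.

```python
import math


def forward(xs, w_x, w_h, w_y):
    """Run a scalar RNN over xs; return the output and all hidden states."""
    hs = [0.0]  # hs[0] is the initial state h_0
    for x in xs:
        # Current state from the current input and the previous state.
        hs.append(math.tanh(w_h * hs[-1] + w_x * x))
    # The output is computed from the final state only.
    return w_y * hs[-1], hs


def loss(xs, target, w_x, w_h, w_y):
    """Squared error between the network output and the target."""
    y, _ = forward(xs, w_x, w_h, w_y)
    return 0.5 * (y - target) ** 2


def gradients(xs, target, w_x, w_h, w_y):
    """Back-propagate the error through every time step."""
    y, hs = forward(xs, w_x, w_h, w_y)
    error = y - target
    grad_w_y = error * hs[-1]
    grad_w_x = 0.0
    grad_w_h = 0.0
    d_h = error * w_y  # derivative of the loss w.r.t. the final state
    for t in range(len(xs), 0, -1):
        d_pre = d_h * (1.0 - hs[t] ** 2)  # back through tanh
        grad_w_x += d_pre * xs[t - 1]
        grad_w_h += d_pre * hs[t - 1]
        d_h = d_pre * w_h  # pass the error on to the previous state
    return grad_w_x, grad_w_h, grad_w_y


def train_rnn(xs, target, w_x, w_h, w_y, learning_rate=0.1, epochs=200):
    """Plain gradient descent on the three shared weights."""
    for _ in range(epochs):
        g_x, g_h, g_y = gradients(xs, target, w_x, w_h, w_y)
        w_x -= learning_rate * g_x
        w_h -= learning_rate * g_h
        w_y -= learning_rate * g_y
    return w_x, w_h, w_y


xs = [0.5, -0.3, 0.8]  # hypothetical input sequence
target = 0.4           # hypothetical target output
initial = (0.5, 0.5, 0.5)
trained = train_rnn(xs, target, *initial)
initial_loss = loss(xs, target, *initial)
trained_loss = loss(xs, target, *trained)
print(initial_loss, trained_loss)
```

Because the same `w_x` and `w_h` are used at every time step, their gradients are accumulated across all steps in the backward loop before a single update is applied.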

Advantages of Recurrent Neural Network

- An RNN retains information through time. Its ability to remember previous inputs is what makes it useful in time series prediction. Long Short-Term Memory (LSTM) networks are an RNN variant built around this idea.

- Recurrent neural networks are even used with convolutional layers to extend the effective pixel neighborhood.

Disadvantages of Recurrent Neural Network

- Gradient vanishing and exploding problems.

- Training an RNN is a very difficult task.

- It cannot process very long sequences when tanh or ReLU is used as the activation function.
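The vanishing and exploding gradient problems can be illustrated with a scalar recurrence h_t = act(w_h * h_{t-1}) that receives no input. By the chain rule, the derivative of the final state with respect to the initial state is the product of one factor per time step. The sketch below is a simplified illustration: the function name, the starting state and the weight values are hypothetical, and the "linear" activation is used only to isolate the effect of the weight.

```python
import math


def gradient_through_time(w_h, steps, activation="tanh", h0=0.5):
    """Return d h_T / d h_0 for the recurrence h_t = act(w_h * h_{t-1})."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        pre = w_h * h
        if activation == "tanh":
            h = math.tanh(pre)
            local = 1.0 - h * h  # derivative of tanh at this step
        else:  # "linear": identity activation
            h = pre
            local = 1.0
        # Chain rule: one multiplicative factor per time step.
        grad *= w_h * local
    return grad


vanishing = gradient_through_time(0.5, 50)
exploding = gradient_through_time(1.5, 50, activation="linear")
print(vanishing, exploding)
```

With a recurrent weight of 0.5 every factor is at most 0.5, so after 50 steps almost no error signal reaches the early time steps. With a weight of 1.5 and no squashing, the same product grows without bound.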
